An in-depth comparison of Keras, PyTorch, and several others.

As deep learning has grown in popularity over the past decade, more and more companies and developers have created frameworks to make it more accessible. There are now so many deep learning frameworks that the average practitioner probably isn’t even aware of all of them. With so many options available, which framework should you pick?

In this article, I will give you a tour of some of the most common Python deep learning frameworks and compare them in a way that allows you to decide which framework is the right one to use in your projects.

TensorFlow/Keras

https://www.tensorflow.org/

I have purposely bundled these two frameworks together because the latest versions of TensorFlow are tightly integrated with Keras. Keras serves as a high-level programming interface that uses TensorFlow as a backend. This means that if you use Keras, you are effectively using TensorFlow as well.

TensorFlow was released in 2015 by the Google Brain Team and is arguably the most popular deep learning framework as of 2020. However, TensorFlow’s graph programming model comes with a steep learning curve. Keras, created by François Chollet, a researcher at Google, provides a more intuitive, high-level interface that hides much of this complexity, and it is now TensorFlow’s official high-level API.

Features

- A simple, high-level API (Keras) that is beginner-friendly.

- Support for training on GPUs and TPUs (Tensor Processing Units).

- Support for multi-GPU and distributed training.

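- Flexible deployment options, such as TensorFlow Serving for servers, TensorFlow Lite for mobile and embedded devices, and TensorFlow.js for the browser.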

- A wide range of pre-trained models made available via Keras Applications.

- The flexibility to experiment in research by creating custom Keras layers or by using lower-level TensorFlow operations directly from Keras code.

- The ability to automatically search for a good model for a dataset using AutoKeras, an AutoML library built on Keras.

- TensorFlow APIs for Python, JavaScript, C++, Java, and Go.

It’s very clear from the list above that Keras is easy to use, yet scalable and flexible enough to solve a wide range of deep learning problems.
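As a rough illustration of both points, a simple high-level API and room for customization, here is a minimal sketch assuming TensorFlow 2.x with its bundled Keras. The ScaledDense layer is a hypothetical example written for this article, not part of Keras, and the data is random; it only shows that a custom layer drops into an ordinary Sequential model that trains end to end.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras


class ScaledDense(keras.layers.Layer):
    """Hypothetical custom layer: a dense layer whose output is multiplied by a fixed scale."""

    def __init__(self, units, scale=1.0, **kwargs):
        super().__init__(**kwargs)
        self.units = units
        self.scale = scale

    def build(self, input_shape):
        # Create the trainable weights once the input dimension is known.
        self.w = self.add_weight(
            shape=(input_shape[-1], self.units),
            initializer="glorot_uniform",
            trainable=True,
        )
        self.b = self.add_weight(shape=(self.units,), initializer="zeros", trainable=True)

    def call(self, inputs):
        # Low-level TensorFlow ops can be used directly inside a Keras layer.
        return self.scale * (tf.matmul(inputs, self.w) + self.b)


# A small classifier built with the high-level Sequential API.
model = keras.Sequential([
    keras.Input(shape=(20,)),
    ScaledDense(32, scale=0.5),
    keras.layers.Activation("relu"),
    keras.layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

# Random toy data: 64 samples with 20 features and 3 classes.
x = np.random.rand(64, 20).astype("float32")
y = np.random.randint(0, 3, size=(64,))
history = model.fit(x, y, epochs=2, batch_size=16, verbose=0)
preds = model.predict(x[:4], verbose=0)
```

Defining the model, compiling it, and training it each take a single call; the custom layer only needs build() and call().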

Advantages

- Keras is extremely flexible and the API is easy to use.

- With the latest versions of TensorFlow, you get the low-level control that TensorFlow offers along with the high-level interface of Keras.

- Keras is scalable and you can take advantage of the processing power of distributed environments or machines with multiple GPUs.

- AutoKeras brings AutoML to deep learning and takes the guesswork out of hyperparameter tuning and architecture search for certain tasks.

- TensorFlow has APIs for several languages.
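Multi-GPU training in TensorFlow/Keras is typically done through the tf.distribute API. The following is a minimal sketch assuming TensorFlow 2.x; the model and data are placeholders, and on a machine without GPUs the same code simply runs on a single device.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

# MirroredStrategy replicates the model on every visible GPU of this machine and
# combines gradients across replicas with an all-reduce. With no GPU available,
# it falls back to a single (CPU) device.
strategy = tf.distribute.MirroredStrategy()
print("Number of replicas:", strategy.num_replicas_in_sync)

# Variables must be created inside the strategy's scope so they are mirrored.
with strategy.scope():
    model = keras.Sequential([
        keras.Input(shape=(10,)),
        keras.layers.Dense(16, activation="relu"),
        keras.layers.Dense(1),
    ])
    model.compile(optimizer="adam", loss="mse")

# The call to fit() is unchanged: Keras splits each batch across the replicas.
x = np.random.rand(128, 10).astype("float32")
y = np.random.rand(128, 1).astype("float32")
history = model.fit(x, y, epochs=2, batch_size=32, verbose=0)
```

Only the strategy and its scope are new compared with single-device code, which is what makes scaling a Keras model to several GPUs fairly painless.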

Disadvantages

- TensorFlow APIs in languages other than Python are not necessarily backward-compatible and are not covered by the API stability promises that apply to the Python API.

- Keras is a little slower than some of the other frameworks.

PyTorch

PyTorch is a deep learning framework that was created and initially released by Facebook AI Research (FAIR) in 2016. It is similar to Keras but has a more complex API, and it offers interfaces for Python, Java, and C++. Interestingly, several modern deep learning software products were built with PyTorch, such as Tesla Autopilot and Uber’s Pyro.

Features

- An API that is a bit more complex than that of Keras, but still relatively easy to use.

- Support for training on GPUs and TPUs.

- Support for distributed training and multi-GPU models.

- APIs are available for Python, Java, and C++.

- PyTorch sits at the center of a large ecosystem of tools that are built on top of it.

- Auto-PyTorch provides automatic architecture searching and hyperparameter tuning for a limited range of tasks.

- Like Keras, PyTorch has pre-trained models available in TorchVision.

- PyTorch lets you build custom components by extending the neural network classes it provides, such as torch.nn.Module.
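To make the point about extending PyTorch’s neural network classes concrete, here is a minimal sketch assuming a recent PyTorch install. ResidualMLP is a hypothetical model invented for illustration; the pattern itself, subclassing torch.nn.Module and writing a forward() method, is the standard way to define custom PyTorch models.

```python
import torch
from torch import nn


class ResidualMLP(nn.Module):
    """Hypothetical example: a small MLP with one residual (skip) connection."""

    def __init__(self, in_features: int, hidden: int, num_classes: int):
        super().__init__()
        self.inp = nn.Linear(in_features, hidden)
        self.block = nn.Sequential(nn.ReLU(), nn.Linear(hidden, hidden))
        self.out = nn.Linear(hidden, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h = self.inp(x)
        # The forward pass is ordinary Python, so custom wiring such as a
        # skip connection is just an addition.
        h = h + self.block(h)
        return self.out(torch.relu(h))


model = ResidualMLP(in_features=20, hidden=32, num_classes=3)
logits = model(torch.randn(4, 20))  # a batch of 4 random inputs
```

Because forward() is plain Python, research ideas such as unusual connections or conditional logic can be expressed without any special graph-building API.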

Advantages

- PyTorch offers flexibility when it comes to creating your own neural network architectures for research.

- Like Keras, PyTorch allows you to train your models in distributed environments and on multiple GPUs.

- Auto-PyTorch allows you to take advantage of AutoML.

- PyTorch arguably offers better support for its C++ and Java APIs than TensorFlow/Keras does.

- PyTorch has a large ecosystem of additional frameworks built on top of it, such as Skorch, which provides full Scikit-learn compatibility for your Torch models.

Disadvantages

- The API is not as simple as that of Keras and is a little harder to use.

- Auto-PyTorch is arguably not as sophisticated as AutoKeras.
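Part of what makes the PyTorch API feel more complex than Keras is that you usually write the training loop yourself instead of calling a single fit() method. The sketch below, assuming a recent PyTorch install, fits a single linear layer to toy data generated from y = 3x + 1; the data, learning rate, and number of steps are arbitrary choices for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Toy regression data: y = 3x + 1 plus a little noise.
x = torch.randn(256, 1)
y = 3 * x + 1 + 0.1 * torch.randn(256, 1)

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# Each step is spelled out explicitly; Keras hides this loop behind model.fit().
for step in range(100):
    optimizer.zero_grad()        # clear gradients from the previous step
    loss = loss_fn(model(x), y)  # forward pass on the full batch
    loss.backward()              # backpropagation
    optimizer.step()             # gradient-descent parameter update

print(model.weight.item(), model.bias.item())
```

The explicit loop is more code, but it is also what gives researchers fine-grained control over every training step.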

Caffe

Caffe, which stands for Convolutional Architecture for Fast Feature Embedding, is a deep learning framework that was developed and released by researchers at UC Berkeley in 2013. It is written in C++ but also features a Python interface. Caffe was designed with expressiveness and speed in mind and is geared toward computer vision applications. However, as of 2020, it is outdated as a standalone framework: Facebook created Caffe2 to extend the capabilities of Caffe and later merged Caffe2 into PyTorch.

Features

- Caffe is extremely fast. In fact, with a single Nvidia K40 GPU, Caffe can process over 60 million images per day.

- The Caffe Model Zoo features many pre-trained models that can be reused for different tasks.

- Caffe has great support for its C++ API.

Advantages

- Caffe is really fast, and some benchmark studies have shown that it is even faster than TensorFlow.

- Caffe was designed for computer vision applications.

Disadvantages

- The documentation is not as easy to follow as that of Keras and PyTorch.

- The community is not as big as the ones for Keras and PyTorch.

- The API for Caffe is not as beginner-friendly as the ones for Keras and PyTorch.

- Caffe is great for computer vision applications but not suited for NLP applications involving architectures such as recurrent neural networks.

- As of 2020, it is outdated as a framework and not as popular as Keras or PyTorch.

MXNet

Apache MXNet is an open-source deep learning library hosted by the Apache Software Foundation. It supports many different languages and is supported by several cloud providers, such as Amazon Web Services (AWS) and Microsoft Azure. Amazon also named MXNet its deep learning framework of choice at AWS.

Features

- MXNet is flexible and supports eight different languages, including Python, Scala, and Julia.

- Like Keras and PyTorch, MXNet also offers multi-GPU and distributed training.

- MXNet offers flexibility in terms of deployment by letting you export a neural network for inference in different languages.

- Like PyTorch, MXNet has a large ecosystem of supporting libraries for applications such as computer vision and NLP (for example, GluonCV and GluonNLP).

Advantages

- MXNet offers flexibility for model deployment because it supports many different languages and allows you to export models for use with different languages.

- MXNet offers multi-GPU and distributed training like Keras and PyTorch.

- MXNet also has a large ecosystem of libraries that support it.

- MXNet has a large community that interacts through its GitHub repository, the forums on its website, and a Slack group.

Disadvantages

- The MXNet API is still more complicated than that of Keras.

- MXNet is similar to PyTorch in terms of syntax but lacks some of the functions that are present in PyTorch.

Overall Comparison

The two frameworks that are the most popular (and for good reasons) are TensorFlow/Keras and PyTorch. Overall, for deep learning applications in general, these are arguably the best frameworks to use. Both frameworks offer a balance between high-level APIs and the ability to customize your deep learning models without compromising on functionality. I am personally a fan of Keras and if I had to choose between PyTorch and Keras I would choose Keras as the best overall deep learning framework. However, PyTorch is definitely not far behind and MXNet is also a decent option to consider.

Best for Beginners

Keras is easily the best framework for beginners to start with. The API is extremely simple and easy to understand. There is a reason why François Chollet, the creator of Keras, used the phrase “deep learning for humans” to describe his library. If you are a beginner just getting started with deep learning or even an experienced deep learning practitioner who wants to quickly put together a deep learning model in just a few lines of code, I would recommend starting with Keras.

Best for Advanced Research

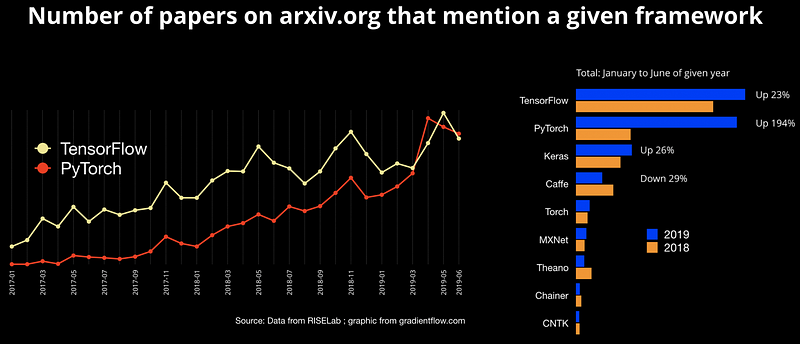

In advanced research involving deep learning, especially when the goal is to design new architectures or come up with novel methods, the ability to experiment and create highly customized neural networks is extremely important. For this reason, PyTorch, which offers a large ecosystem of supporting libraries as well as the ability to extend and customize its modules, is gaining popularity in research. Consider the graph above, in which PyTorch achieved a 194 percent increase in arXiv paper mentions from 2018 to 2019. Compare this with the 23 percent increase in mentions for TensorFlow, and it is clear that PyTorch is growing faster in the research community. In fact, this data is a year old, and PyTorch may have already surpassed TensorFlow in the research community as of 2020.
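To put these two growth rates side by side, note that a percent increase p multiplies the starting count by 1 + p/100. Using only the two percentages quoted above:

$$
\text{PyTorch: } 1 + \tfrac{194}{100} = 2.94, \qquad \text{TensorFlow: } 1 + \tfrac{23}{100} = 1.23, \qquad \tfrac{2.94}{1.23} \approx 2.39
$$

So PyTorch’s mention count nearly tripled over the period while TensorFlow’s grew by roughly a quarter. These ratios describe relative growth only; they say nothing about the absolute number of mentions, which the percentages alone do not give.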

Summary

- TensorFlow/Keras and PyTorch are overall the most popular and arguably the two best frameworks for deep learning as of 2020.

- If you are a beginner who is new to deep learning, Keras is probably the best framework for you to start out with.

- If you are a researcher looking to create highly customized architectures, you might be slightly better off choosing PyTorch over TensorFlow/Keras.

Sources

- M. Abadi, P. Barham, et al., TensorFlow: A system for large-scale machine learning, (2016), 12th USENIX Symposium on Operating Systems Design and Implementation.

- F. Chollet, et al., Keras, (2015).

- A. Paszke, S. Gross, et al., PyTorch: An Imperative Style, High-Performance Deep Learning Library, (2019), 33rd Conference on Neural Information Processing Systems.

- Y. Jia, E. Shelhamer, et al., Caffe: Convolutional Architecture for Fast Feature Embedding, (2014), arXiv.

- T. Chen, M. Li, et al., MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems, (2015), Neural Information Processing Systems, Workshop on Machine Learning Systems.

- E. Bingham, J. P. Chen, et al., Pyro: Deep Universal Probabilistic Programming, (2018), Journal of Machine Learning Research.