Understanding the hype behind this language model that generates human-like text

GPT-3 (Generative Pre-trained Transformer 3) is a language model created by OpenAI, an artificial intelligence research laboratory in San Francisco. The 175-billion-parameter deep learning model produces human-like text and was trained on large text datasets containing hundreds of billions of words.

“I am open to the idea that a worm with 302 neurons is conscious, so I am open to the idea that GPT-3 with 175 billion parameters is conscious too.” — David Chalmers

Since last summer, GPT-3 has made headlines, and entire startups have been created using this tool. However, it’s important to understand the facts behind what GPT-3 really is and how it works rather than getting lost in all of the hype around it and treating it like a black box that can solve any problem.

In this article, I will give you a high-level overview of how GPT-3 works, as well as the strengths and limitations of the model and how you can use it yourself.

How GPT-3 works

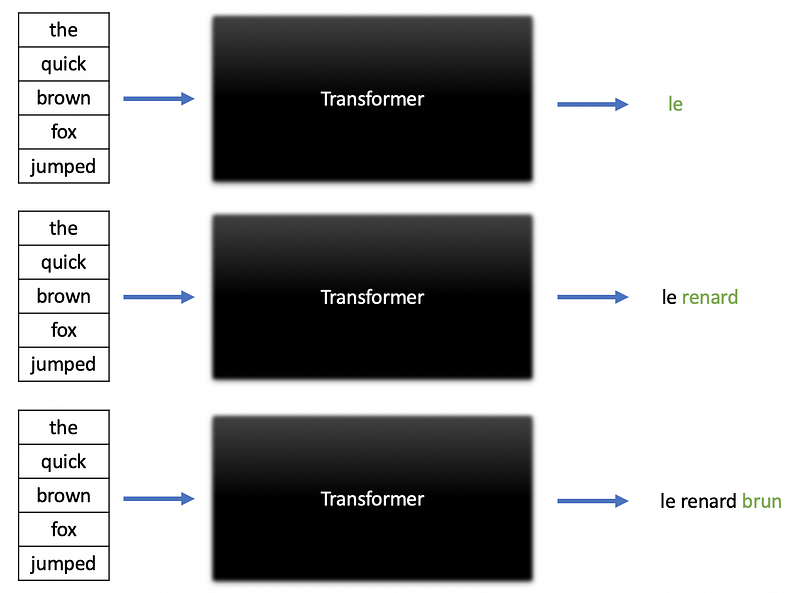

At its core, GPT-3 is a transformer model. Transformer models are deep learning models that produce a sequence of text given an input sequence. The original transformer was designed as a sequence-to-sequence model, and transformers are now used for text generation tasks such as question answering, text summarization, and machine translation. GPT-3 uses only the decoder part of this architecture and generates text one token at a time, feeding each new token back in as input. The image above demonstrates how a transformer model iteratively generates a French translation of an English input sequence.
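To make "iteratively generates" concrete, here is a toy sketch of autoregressive generation with greedy decoding: at each step the "model" scores possible next tokens, the best one is appended, and the extended sequence is used for the next step. The `TOY_MODEL` table is a hypothetical hand-written bigram table standing in for a real network; a transformer like GPT-3 would compute these scores from the entire sequence so far, not just the last token.

```python
from typing import Dict, List

# Hypothetical next-token scores keyed by the previous token. This is NOT
# GPT-3: a real transformer computes scores from the whole input sequence.
TOY_MODEL: Dict[str, Dict[str, float]] = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "<end>": 0.2},
    "a": {"dog": 0.7, "cat": 0.3},
    "cat": {"sat": 0.8, "<end>": 0.2},
    "dog": {"ran": 0.6, "<end>": 0.4},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}


def greedy_generate(model: Dict[str, Dict[str, float]], max_tokens: int = 10) -> List[str]:
    """Repeatedly append the highest-scoring next token until <end>."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        scores = model[tokens[-1]]
        next_token = max(scores, key=scores.get)  # greedy: take the top score
        if next_token == "<end>":
            break
        tokens.append(next_token)  # the new token becomes part of the input
    return tokens[1:]  # drop the start marker


print(" ".join(greedy_generate(TOY_MODEL)))  # the cat sat
```

Greedy decoding is the simplest choice; real systems often sample from the scores instead, which gives more varied text.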

Unlike LSTMs, which process a sequence one token at a time, transformer models use multiple units called attention blocks to learn which parts of a text sequence are important to focus on. A single transformer may have several separate attention blocks that each learn different aspects of language, ranging from parts of speech to named entities. For an in-depth overview of how transformers work, check out my article below.

https://towardsdatascience.com/what-are-transformers-and-how-can-you-use-them-f7ccd546071a
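The core computation inside each attention head is scaled dot-product attention from Vaswani et al. (2017): softmax(QKᵀ/√d)·V. The sketch below implements a single head in plain Python for readability. It is a simplification: real implementations use matrix libraries and learned projection matrices to produce the queries, keys, and values, which are omitted here (the example simply reuses the input vectors). The `causal` flag shows the masking that decoder-only models like GPT use so each token can only attend to earlier tokens.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix,
              causal: bool = False) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    With causal=True, position i may only attend to positions j <= i.
    """
    d = len(keys[0])
    outputs: Matrix = []
    weights: Matrix = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future tokens
            else:
                scores.append(sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d))
        w = softmax(scores)
        weights.append(w)
        # Each output row is the attention-weighted average of the value rows.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights


# Two 2-dimensional token vectors attending to each other (self-attention).
X = [[1.0, 0.0], [0.0, 1.0]]
out, w = attention(X, X, X)
print(w)  # each row sums to 1
out_c, w_c = attention(X, X, X, causal=True)
print(w_c[0])  # [1.0, 0.0]: the first token can only see itself
```

A multi-head layer runs several of these computations in parallel on different learned projections and concatenates the results, which is what lets separate heads specialize in different aspects of language.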

GPT-3 is the third generation of the GPT language models created by OpenAI. The main difference that sets GPT-3 apart from previous models is its size. GPT-3 contains 175 billion parameters, making it more than 100 times as large as GPT-2 (1.5 billion parameters) and about 10 times as large as Microsoft’s Turing NLG model (17 billion parameters). Referring to the transformer architecture described in my previous article listed above, GPT-3 has 96 attention blocks that each contain 96 attention heads. In other words, GPT-3 is basically a giant transformer model.
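To see where a number like 175 billion comes from, the sketch below estimates the parameter count from GPT-3's architecture hyperparameters (96 layers, a model width of 12,288, 96 heads, and a 2,048-token context, as reported by Brown et al., 2020). It relies on a common back-of-the-envelope approximation of about 12·d² weights per layer, ignores biases and layer-norm parameters, and assumes the output layer shares the token-embedding weights as in GPT-2, so treat the result as an estimate rather than an exact count. It also compares the result with GPT-2's 1.5 billion parameters and estimates the memory needed just to store the weights in 16-bit floats.

```python
def approx_params(n_layers: int, d_model: int, vocab_size: int, n_ctx: int) -> int:
    """Rough parameter count for a GPT-style decoder-only transformer."""
    # Per layer: 4 d^2 for the Q, K, V, and output projections of attention,
    # plus 8 d^2 for a feed-forward layer with a 4x hidden size.
    per_layer = 12 * d_model ** 2
    # Token embeddings plus learned position embeddings.
    embeddings = (vocab_size + n_ctx) * d_model
    return n_layers * per_layer + embeddings


# GPT-3 175B hyperparameters as reported in the GPT-3 paper.
N_LAYERS, D_MODEL, N_HEADS, N_CTX = 96, 12288, 96, 2048
VOCAB = 50257  # byte-pair-encoding vocabulary shared with GPT-2

total = approx_params(N_LAYERS, D_MODEL, VOCAB, N_CTX)
print(f"size of each attention head: {D_MODEL // N_HEADS}")  # 128
print(f"approx. parameters: {total / 1e9:.1f} billion")      # 174.6 billion
print(f"ratio to GPT-2 (1.5B parameters): about {total / 1.5e9:.0f}x")
# 16-bit floats take 2 bytes per parameter.
print(f"fp16 weights alone: about {total * 2 / 1e9:.0f} GB")
```

The estimate lands within about half a percent of the reported 175 billion, which shows that almost all of GPT-3's size comes from stacking 96 very wide transformer layers.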

Based on the original paper that introduced this model, GPT-3 was trained using a combination of the following large text datasets:

- Common Crawl

- WebText2

- Books1

- Books2

- Wikipedia Corpus

The final dataset contained a large portion of web pages from the internet, a giant collection of books, and all of English-language Wikipedia. Researchers used this dataset of hundreds of billions of words to train GPT-3 to generate text in English and several other languages.
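The GPT-3 paper notes that these datasets were not sampled in proportion to their size: smaller, higher-quality sources were seen more than once during training, while the much larger Common Crawl set was seen less than once. The sketch below illustrates that kind of weighted mixture sampling. The weights are the approximate per-source proportions reported in the paper; the sampler itself is an illustration, not OpenAI's training code.

```python
import random
from collections import Counter

# Approximate share of training examples drawn from each source.
# They sum to about 1.01 because of rounding; random.choices does not
# require normalized weights.
MIXTURE = {
    "Common Crawl (filtered)": 0.60,
    "WebText2": 0.22,
    "Books1": 0.08,
    "Books2": 0.08,
    "Wikipedia": 0.03,
}


def sample_sources(n: int, seed: int = 0) -> Counter:
    """Pick the source dataset for each of n training examples."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    names = list(MIXTURE)
    weights = list(MIXTURE.values())
    return Counter(rng.choices(names, weights=weights, k=n))


counts = sample_sources(100_000)
for name, count in counts.most_common():
    print(f"{name:<25} {count / 100_000:.1%}")
```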

Why GPT-3 is so powerful

GPT-3 has made headlines since last summer because it can perform a wide variety of natural language tasks and produces human-like text. The tasks that GPT-3 can perform include, but are not limited to:

- Text classification (e.g., sentiment analysis)

- Question answering

- Text generation

- Text summarization

- Named-entity recognition

- Language translation

Based on the tasks that GPT-3 can perform, we can think of it as a model that performs reading comprehension and writing tasks at a near-human level, except that it has seen more text than any human will ever read in their lifetime. This is exactly why GPT-3 is so powerful. Entire startups have been created with GPT-3 because we can think of it as a general-purpose Swiss Army knife for solving a wide variety of problems in natural language processing.
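GPT-3 handles these tasks without task-specific training. You describe the task in the prompt, often with a few worked examples, and the model continues the text. This is the "few-shot" setting from the paper Language Models are Few-Shot Learners. The helper below builds such a prompt for sentiment classification. The prompt format, labels, and example reviews are illustrative assumptions, not a format prescribed by OpenAI.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Task description, labeled examples, then the new input left unlabeled."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")  # blank line between examples
    # The model is expected to continue the text right after "Sentiment:".
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each movie review as Positive or Negative.",
    [
        ("A beautiful, moving film.", "Positive"),
        ("Two hours I will never get back.", "Negative"),
    ],
    "The acting was wooden and the plot made no sense.",
)
print(prompt)
```

Swapping the task description and examples turns the same pattern into a prompt for summarization, translation, or question answering, which is what makes a single model useful for so many tasks.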

Limitations of GPT-3

While GPT-3 is, at the time of writing, the largest and arguably the most powerful language model, it has its own limitations. In fact, every machine learning model, no matter how powerful, has certain limitations, a concept I explored in detail in my article about the No Free Lunch Theorem.

Consider some of the limitations of GPT-3 listed below:

- GPT-3 lacks long-term memory: unlike humans, the model does not learn anything from long-term interactions.

- Lack of interpretability: this is a problem that affects extremely large and complex models in general. GPT-3 is so large that it is difficult to interpret or explain the output that it produces.

- Limited input size: transformers have a fixed maximum input size, and GPT-3 can only attend to 2,048 tokens (roughly 1,500 words of English) at a time, so a prompt and everything the model should take into account must fit within that window.

- Slow inference time: because GPT-3 is so large, every generated token requires a pass through all 175 billion parameters, which makes producing predictions slow and expensive.

- GPT-3 suffers from bias: all models are only as good as the data used to train them, and GPT-3 is no exception. A paper by Abid, Farooqi, and Zou (2021), for example, demonstrates that GPT-3 and other large language models contain anti-Muslim bias.
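The lack of long-term memory and the limited input size work together in practice. The model keeps nothing between requests, and GPT-3's context window is 2,048 tokens, so chat-style applications typically resend the recent conversation with every request and drop the oldest turns once the window is full. The sketch below shows that idea. It counts whitespace-separated words as a rough stand-in for tokens; this is an assumption, since GPT-3 actually counts byte-pair-encoding tokens and a real implementation should use the model's tokenizer. The amount of room reserved for the reply is a hypothetical parameter.

```python
from typing import List

CONTEXT_WINDOW = 2048      # GPT-3's maximum context length, in tokens
RESERVED_FOR_REPLY = 256   # hypothetical room left for the model's answer


def count_tokens(text: str) -> int:
    # Crude approximation: one "token" per whitespace-separated word.
    return len(text.split())


def build_context(history: List[str], new_message: str,
                  window: int = CONTEXT_WINDOW,
                  reserve: int = RESERVED_FOR_REPLY) -> List[str]:
    """Return the most recent turns that fit, always ending with new_message."""
    budget = window - reserve - count_tokens(new_message)
    kept: List[str] = []
    # Walk backwards so the most recent turns are kept first.
    for turn in reversed(history):
        cost = count_tokens(turn)
        if cost > budget:
            break  # this turn and everything older is "forgotten"
        kept.append(turn)
        budget -= cost
    kept.reverse()  # restore chronological order
    return kept + [new_message]


history = ["User: Hi!", "Bot: Hello, how can I help?"]
print(build_context(history, "User: What is GPT-3?"))
```

Anything that falls out of the window is simply gone as far as the model is concerned; the model never updates its weights from the conversation.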

While GPT-3 is powerful, it still has limitations that make it far from being a perfect language model or an example of artificial general intelligence (AGI).

How you can use GPT-3

Currently, GPT-3 is not open-source; instead, OpenAI decided to make the model available through a commercial API. This API is in private beta, which means that you will have to fill out the OpenAI API Waitlist Form to join the waitlist before you can use it.

OpenAI also has a special program for academic researchers who want to use GPT-3. If you want to use GPT-3 for academic research, you should fill out the Academic Access Application.

While GPT-3 is not open-source or publicly available, its predecessor, GPT-2, is open-source and accessible through Hugging Face’s transformers library. Feel free to check out the documentation for Hugging Face’s GPT-2 implementation if you want to use this smaller, yet still powerful, language model instead.

Summary

GPT-3 has received a lot of attention since last summer because it is, at the time of writing, by far the largest and arguably the most powerful language model ever created. However, GPT-3 still suffers from several limitations that make it far from being a perfect language model or an example of AGI. If you would like to use GPT-3 for research or commercial purposes, you can apply to use OpenAI’s API, which is currently in private beta. Otherwise, you can always work directly with GPT-2, which is publicly available and open-source thanks to Hugging Face’s transformers library.


Sources

- T. Brown, B. Mann, N. Ryder, et al., Language Models are Few-Shot Learners, (2020), arXiv.org.

- A. Abid, M. Farooqi, and J. Zou, Persistent Anti-Muslim Bias in Large Language Models, (2021), arXiv.org.

- Wikipedia, Artificial general intelligence, (2021), Wikipedia the Free Encyclopedia.

- G. Brockman, M. Murati, P. Welinder and OpenAI, OpenAI API, (2020), OpenAI Blog.

- A. Vaswani, N. Shazeer, et al., Attention Is All You Need, (2017), 31st Conference on Neural Information Processing Systems.